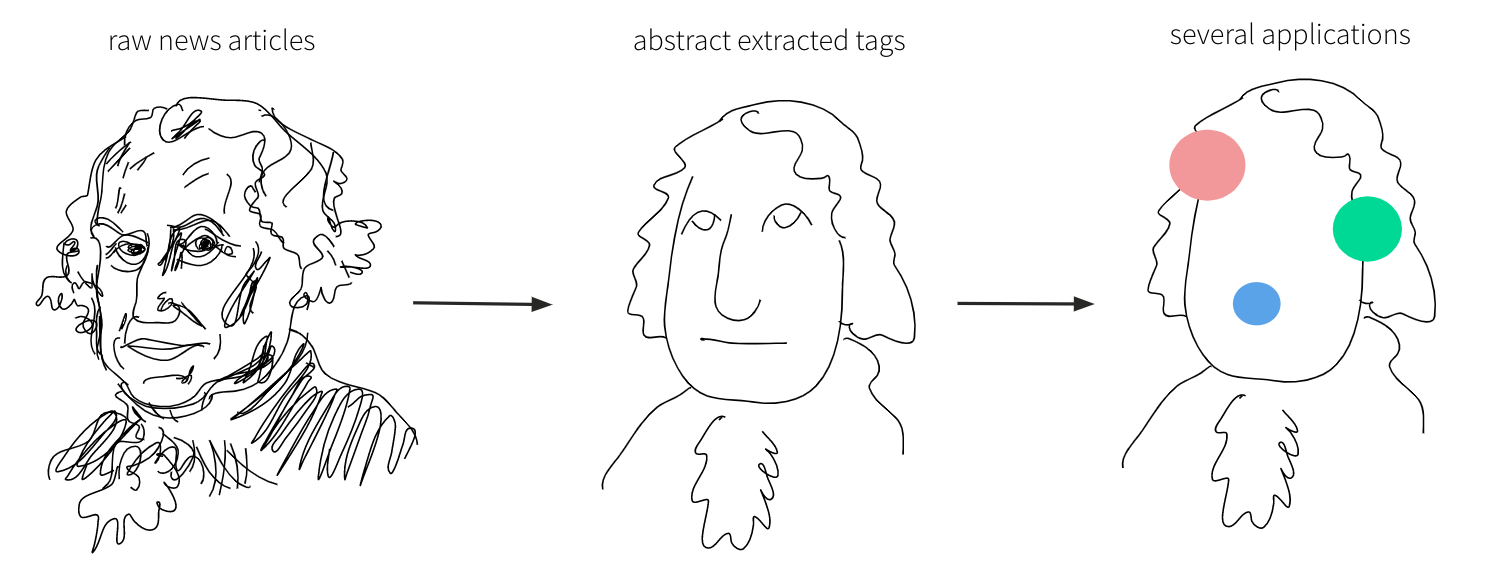

One way to apply machine learning, the most common form of AI nowadays, is to split its application into two steps:

1) In a first technical step, the objects of interest – for instance, news articles for media houses – are processed into a structured format;

2) While in a second heuristic step, this structured format is exploited for various applications. A natural application is to search for similar news articles.

We illustrate this process in Figure 1:

Figure 1: First abstract objects – for instance, raw news articles – and then use these abstractions – for instance, extratcted tags – in several applications.

Figure 1: First abstract objects – for instance, raw news articles – and then use these abstractions – for instance, extratcted tags – in several applications.

A popular approach to give structure to news articles is to automatically assign tags. Tags can be broadly classified as follows:

1) Tags that are directly contained in the text, for example the name of a person – say, 'Joe Biden';

2) Abstract tags that are not necessarily explicit in the text. For example, a topic like 'Politics';

3) Meta-information like a manually-assigned 'Evergreen' tag.

The tags of the first type can be dealt with by classic Named Entity Recognition (NER) algorithms. Common subclasses are “People”, “Organisations”, and “Locations”. On the other hand, the second class of tags detailed above requires some specifically-trained classification algorithms. Finally, the last group (which is not used at TX Group yet) would require an additional automatic or semi-automatic process that will depend on the type of meta-information. For instance, we have seen in earlier sections that there are many ways to define evergreen content.

Searching for articles

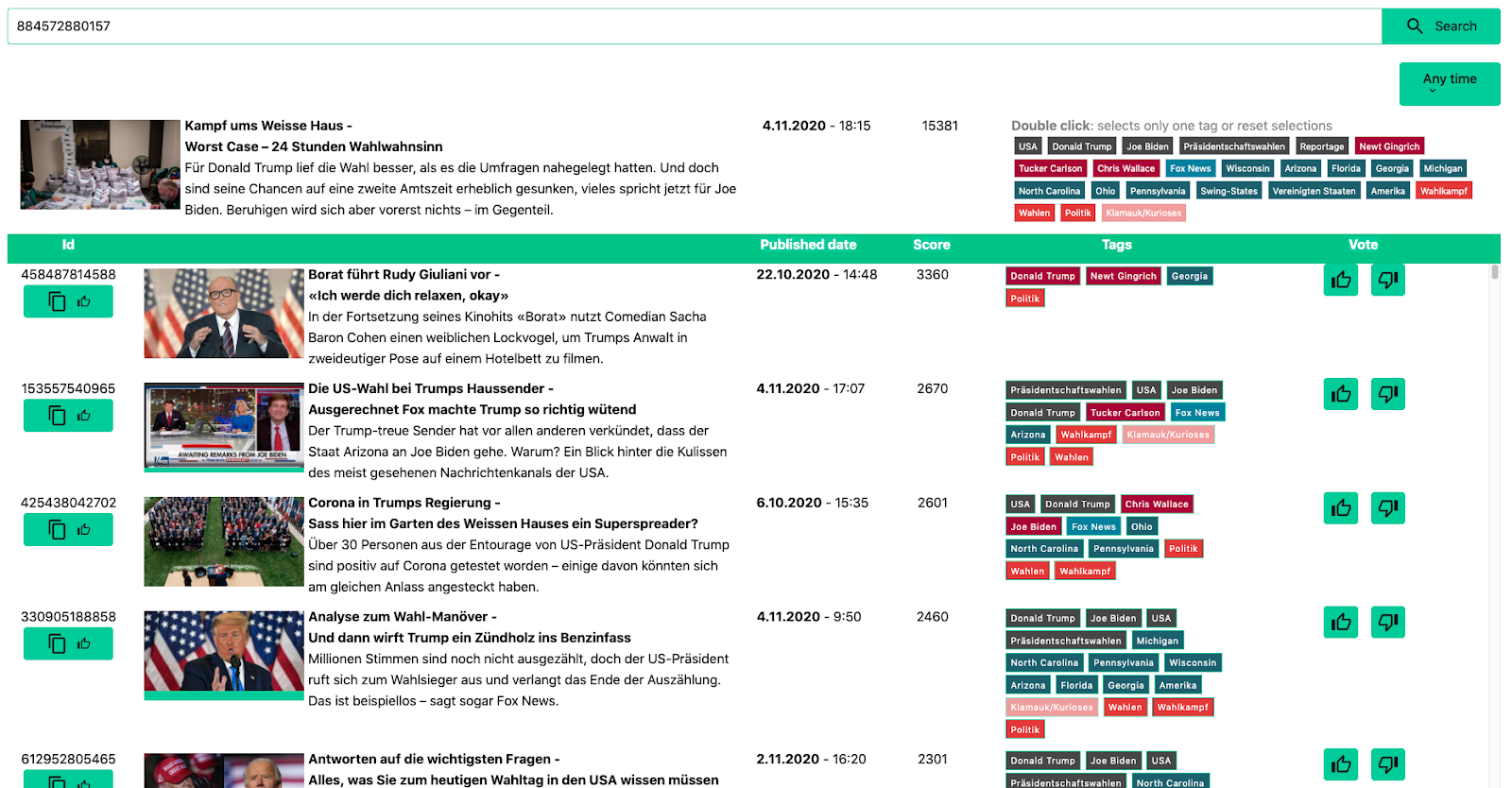

The main benefit of merging combining different tags in the same solution lies in the possibility to use them in a unified search procedure. At TX Group, we are currently using the following logic in a beta test:

1) Given a specific article, we score all other articles published within a reasonable time window according to their similarity to the first one, with respect to tag overlap. That is, we assign some points for each joint tag, and then compute the score by simply summing up the points.

2) A major improvement is to assign points according to the frequency of a tag. For instance, if a tag is very common like 'Politics', it should correspond to fewer points than a more specific one like the name of an individual. To deal with this, we use a so-called Term-Inverse Frequency strategy: If a tag appears in only one article every 100, it receives 1/100 points on the similarity score. So the more frequent a tag is, the less important it is for the score.

3) Another positive adjustment is to linearly scale the points according to the number of occurrences of a tag, which applies only to the first group of tags described above. For instance, if two articles contain the name 'Joe Biden' several times, then the assigned points are scaled accordingly.

Figure 2 shows a screenshot of our current search GUI (graphical user interface). Our goal is to integrate this more tightly into the journalistic production process.

Figure 2: Search GUI at TX Group based on tags. The different colours correspond to the different classes and subclasses of tags described above

Figure 2: Search GUI at TX Group based on tags. The different colours correspond to the different classes and subclasses of tags described above

Technical Implementation

To power this process, a NER-tagger is required to create the tags of the first class. As already mentioned, NER stands for Named Entity Recognition, an NLP technique to extract keywords from a text that has a special meaning, like names of people. There are many paid APIs on the market that cover this niche. The ones we tested provide quite good results: Rosette is a good example. On the other hand, there are also reasonably good Python packages that provide this functionality. Arguably the most popular ones are spaCy and Flair:

spaCy

- PRO: most popular NLP package, runs very fast

- CON: limited quality for NER in our test cases

Flair

- PRO: acceptable quality and good multi-language support

- CON: quite new and far slower

In the end, since we favoured quality over speed, we settled on Flair for our NER tagging process.

On the other hand, to classify articles by creating tags of the second class, we use a standard neural network which encodes articles using word vectors. As written above, the integration of class-3 tags is still an open challenge at TX-Group – which our journalists are asking us to solve.

Thanks to Tim Nonner, Chief Data Scientist at TX Group, for contributing to the report with this case study.