Immunity testing has been touted as one of the best ways to escape lockdown, but just how accurate will these tests have to be? Richard Bradley and Liam Kofi Bright look at inductive risk and policy-making during the pandemic.

As debate shifts in many parts of the world from the question of how to respond to an emerging pandemic to that of how to exit from the lockdown imposed to slow down the transmission of the virus, the potential of antibody tests for immunity is receiving increasing attention. Some emphasise that widespread immunity testing and “passporting” of the immune offer the best prospect of restarting the economy, at least until a vaccine becomes widely available. Chile, for instance, has begun issuing immunity certificates, and China uses a system of colour coding to sort citizens according to whether or not they have permission to be about town.

Antibody testing divides the population into those who are immune to the virus, and who can therefore resume participation in economic and social life without fear, and those who are not, who remain vulnerable and must therefore participate only in a very restricted way. If it works, it allows economic activity to resume to the extent that is consistent with keeping people safe. This improves the wellbeing of those who have immunity and, in the longer term, by reducing the economic cost of the pandemic, of those who do not.

Others are less sanguine about the benefits of immunity testing and passporting, emphasising the many problems with it: many of the available tests are insufficiently accurate, there is still uncertainty as to how long immunity will last and hence how frequently people will need to be tested, and policies based on immunity status may lead to new class divisions between the haves and the have-nots. The WHO, for instance, recently warned against using antibody tests to determine who could return to work or travel.

If a reasoned assessment of these competing positions is to be made, we need to start by being clear about how we should understand the results that antibody tests give us. What we want from a test of any kind is that it should be accurate, and reliably so. Accuracy is a matter of two things: sensitivity and specificity. The sensitivity of a test for some condition C is a measure of how good it is at telling that someone has C when they do in fact have it. The specificity of a test for C is a measure of how good it is at telling that someone doesn’t have C when they don’t in fact have it. These are typically measured by conditional probabilities: respectively, the probability of a positive verdict on C given that C holds, and the probability of a negative verdict given that it doesn’t. Sensitive tests avoid so-called “false negatives”, a verdict that someone is free of the condition when they are not, while specific tests avoid “false positives”, a verdict that someone has the condition when they do not in fact do so.
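In symbols, and using notation introduced here only for illustration (it does not appear in the original discussion), write $+$ and $-$ for a positive and a negative verdict and $\neg C$ for the absence of the condition:

$$
\text{sensitivity} = P(+ \mid C), \qquad \text{specificity} = P(- \mid \neg C)
$$

The false negative rate is then $1 - \text{sensitivity}$ and the false positive rate is $1 - \text{specificity}$.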

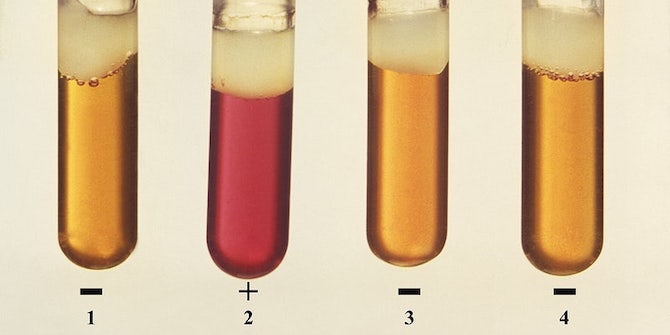

There is always some trade-off in developing a test between sensitivity and specificity. A “test” that always reported that someone had the condition would have a sensitivity of one – the perfect score – but would perform badly on the specificity side. And vice versa. Obviously, these wouldn’t be good tests. But even the best designed ones have to deal with the fact that both the condition being measured and the evidence for it can be graded and ambiguous. Antibody (or serological) tests aim at answering the question “does this person have immunity?”. They typically do so by measuring the levels of immunoglobulin G (IgG) antibodies in someone’s blood, with this measurement then being used to determine a verdict. But clearly there is some latitude in deciding what level of IgG constitutes being immune. Set the level low and you will ensure you have a sensitive test, but at the expense of specificity. Set it too high and you will get a lot of false negatives.
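The effect of moving the cut-off can be seen in a toy calculation. The sketch below is only an illustration: the IgG readings are invented numbers in arbitrary units, not data from any real test, and the function name `accuracy_at` is hypothetical.

```python
# Hypothetical IgG readings (arbitrary units), invented purely for illustration.
immune_levels = [4.1, 5.3, 6.0, 6.8, 7.5, 8.2, 9.0, 9.7]
non_immune_levels = [0.5, 1.2, 2.0, 2.9, 3.6, 4.4, 5.1, 6.2]


def accuracy_at(threshold):
    """Return (sensitivity, specificity) when a reading >= threshold counts as 'immune'."""
    true_pos = sum(level >= threshold for level in immune_levels)
    true_neg = sum(level < threshold for level in non_immune_levels)
    return true_pos / len(immune_levels), true_neg / len(non_immune_levels)


# A low cut-off catches every immune person but flags many who are not immune;
# a high cut-off does the reverse.
for threshold in (3.0, 5.0, 7.0):
    sens, spec = accuracy_at(threshold)
    print(f"threshold {threshold}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

With these made-up readings, no single cut-off gives a perfect score on both measures, which is the trade-off described above.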

In the controversy around immunity testing, questions have been asked about whether the tests are sufficiently accurate. The main concern of the critics is the specificity of these tests. One reason is that even if the specificity of the test is very high, if the percentage of the population that is immune is small then the test will produce a lot of false positives. Suppose for example we have a group of 1000 people of which 10% have immunity – 100 people. Suppose we perform an immunity test with a sensitivity and specificity of 0.9. Then 90 people with immunity will be correctly identified as such (i.e. 90% of the 100 with immunity) and 90 will be incorrectly identified as immune, even though they are not (i.e. 10% of the 900 people who don’t have immunity). So half of those identified as immune will not be. This is clearly not good enough if we want to use immunity testing as a basis for policies such as passporting the immune.
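The same result can be written as an application of Bayes’ theorem. The symbols are introduced here for illustration only: $p$ is the share of the population that is immune, and $\text{sens}$ and $\text{spec}$ are the test’s sensitivity and specificity.

$$
P(\text{immune} \mid \text{positive}) = \frac{\text{sens} \cdot p}{\text{sens} \cdot p + (1 - \text{spec})(1 - p)} = \frac{0.9 \times 0.1}{0.9 \times 0.1 + 0.1 \times 0.9} = 0.5
$$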

Things look better if a higher percentage of the population has immunity. At 20% for instance the same test would yield 80 false positives versus 180 true positives. And at 40% it would yield 60 false ones versus 360 true ones. In fact we don’t know for sure what the true rate of immunity is (we need widespread and accurate antibody testing for that!) but it seems implausible that it is much above 20% anywhere except places that have had very high levels of infection (both Sweden and New York currently stand at around 20% for instance). Things also look better if tests have sensitivities and specificities of 0.998, as has been claimed for some recently developed ones. Even if only 10% of the population is immune, such tests would yield about 2 false positives per 1000 people tested versus about 100 true positives. So fewer than 2% of those declared immune would not be.
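Readers who want to check these counts, or try other combinations, can recompute them directly. The following is a minimal sketch, assuming a group of 1000 people and a test whose sensitivity and specificity are equal; the function name `positives_per_cohort` is hypothetical.

```python
def positives_per_cohort(prevalence, sensitivity, specificity, cohort=1000):
    """Return expected (true_positives, false_positives) for a tested cohort."""
    immune = cohort * prevalence
    not_immune = cohort - immune
    true_pos = immune * sensitivity              # immune people correctly flagged
    false_pos = not_immune * (1 - specificity)   # non-immune people wrongly flagged
    return true_pos, false_pos


for accuracy in (0.9, 0.998):
    for prevalence in (0.1, 0.2, 0.4):
        tp, fp = positives_per_cohort(prevalence, accuracy, accuracy)
        share_wrong = fp / (tp + fp)  # fraction of positive verdicts that are false
        print(f"accuracy {accuracy}, immune {prevalence:.0%}: "
              f"{tp:.1f} true vs {fp:.1f} false positives "
              f"({share_wrong:.1%} of positives wrong)")
```

The share of wrong positives falls both as immunity becomes more common and as the test becomes more accurate.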

A second issue concerns the reliability of serological tests, i.e. how consistently they perform at their claimed accuracy. There are firstly concerns about the plausibility of claims some manufacturers make about the accuracy of their tests and about the rigour of the procedure used to establish them. (Early experience with antibody tests was disappointing, with governments ordering large quantities of antibody tests only to discover that they were very inaccurate.) But even when the accuracy claims can be trusted, there are reasons for uncertainty about their performance outside of the laboratory. For obvious reasons laboratory tests are typically conducted on individuals who are either known not to be infected or who have been infected under controlled circumstances with a relatively high viral load and sufficiently far in the past for antibodies to have built up. But “in the wild” tests will be performed on individuals infected with low loads of the virus, perhaps quite recently, who won’t be reported as immune, and on others who have been infected with viruses similar to SARS-CoV-2, who will be. And then there are concerns about how accuracy varies with the conditions under which the test is performed: where and by whom (a supermarket car park test conducted by a trainee may not be the same as a laboratory test conducted by a researcher). All of this can lead to a lack of confidence in a test with high reported accuracy.

How accurate (in particular, how specific) do antibody tests have to be in order for us to safely use them? How confident do we have to be in their accuracy? These questions, although much debated, cannot be answered. More exactly, they should not be answered in isolation from the purpose to which the tests will be put. It’s one thing to use immunity tests to assess levels of immunity in the population in order to answer a question like “Can we open schools?”. It’s another to use them to determine whether a particular individual is immune in order to answer a question like “Can we safely employ this person in a care home?”. For instance, suppose we thought it would be safe to open schools when 40% of the population was immune. If immunity was in fact at that level, a test with sensitivity and specificity of 0.9 would report that 42% were immune, which is close enough. Even if the test turned out to be unreliable and actual immunity was 5% lower than it reported, that may not mean that opening schools would lead to disaster. On the other hand, if the same test were used to determine whether someone was safe to employ in a care home, the potential risk to the inhabitants of the care home may make it unwise to depend on a test with specificity of 0.9.
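The 42% figure comes from adding the true positives to the false positives. A minimal sketch of that calculation follows; the function name `reported_share_immune` is hypothetical.

```python
def reported_share_immune(true_share, sensitivity, specificity):
    """Share of the population a test would report as immune.

    True positives (immune and flagged) plus false positives
    (not immune but flagged anyway).
    """
    true_positives = true_share * sensitivity
    false_positives = (1 - true_share) * (1 - specificity)
    return true_positives + false_positives


# 40% truly immune, sensitivity and specificity both 0.9
print(f"{reported_share_immune(0.4, 0.9, 0.9):.0%}")
```

At the population level the two kinds of error partly offset each other, which is one reason a test that is too inaccurate for individual decisions may still be adequate for estimating overall immunity.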

The problem we face in this scenario is familiar to philosophers of science as the problem of “inductive risk” – the world, independent of our input, does not settle how confident we must be in our claims before we may rationally accept them for purposes of making a decision. Plausibly, so a classic argument goes, in order to decide this we must know what is at stake in a given piece of inquiry. The higher the stakes the lower our tolerance should be for unreliability. And just as in our example, the particular nature of the stakes may make it salient whether we should be concerned more for specificity or sensitivity. This is a matter of moral appraisal, of what risks we think are socially acceptable to take, and who should bear the brunt of those risks given how they will be distributed to different sections of the population.

And this is where we think it is important from a public deliberation point of view to make the nature of this choice clear. The authors believe that in a democracy the appropriate way of deciding what sort of risks we are willing to tolerate is by putting the matter up for public debate and deliberation. This should not be framed as a question for technocratic expertise to simply resolve by fiat – no doubt there are difficult and important technical questions to be resolved here, but as we have argued there are inevitably significant moral questions that must also play a role in deciding what sort of testing regime would be appropriate.

As it stands we fear that these decisions are being made largely without public input, with policies simply announced and reassurances given about the reliability of tests. Perhaps it is inevitable in a crisis that decisions have to be made quickly and with less than full consultation. But we hope to have motivated the thought that it is not enough for policy decisions to be based on the best available science. They should also be based on an understanding of what risks an informed public is willing to bear.

By Richard Bradley & Liam Kofi Bright

Richard Bradley is Professor of Philosophy at the London School of Economics and Political Science. He works mainly on decision making under uncertainty, but has broad research interests in formal epistemology, value theory, social choice and philosophy of language (especially conditionals). His recent book “Decision Theory with a Human Face” attempts to provide a theory of rational belief attitude formation and decision making for agents that face uncertainty taking a variety of forms and that are aware of their own bounds.

Liam Kofi Bright is an assistant professor of philosophy at the London School of Economics. His website can be found here: https://www.liamkofibright.com/

Featured image: Public Domain