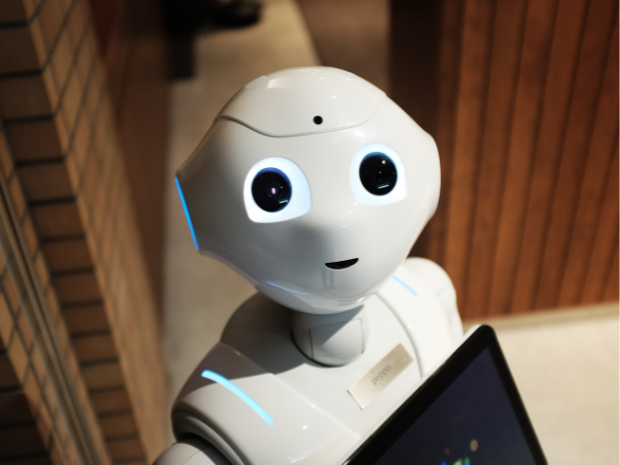

As we move further into the age of AI, organisations must be aware of the unintended consequences of making robots appear ever more human.

A new LSE-led study, recently published in the Journal of Consumer Psychology, shows that the level of humanlike traits present in AI-powered robots and virtual assistants has a significant influence on people’s perceptions of, and behaviour towards, other humans.

Through a series of psychological studies, Dr Hye-young Kim and her co-author Ann L. McGill, Sears Roebuck Professor of General Management, Marketing and Behavioral Science at the University of Chicago Booth School of Business, found that when people perceive AI as having humanlike qualities, they assimilate their view of humans toward their view of AI, leading to dehumanisation and even mistreatment of real people in workplaces.

In one study, participants who saw robots display emotional behaviours, such as dancing, were more likely to support inhumane workplace practices, such as replacing meals with shakes or housing workers in capsule dormitories.

In another study, participants who were exposed to emotionally intelligent AI were less likely to avoid companies known for mistreating employees, providing direct evidence of humanness assimilation between AI and humans.

However, a third study showed the inverse effect. When robots had extreme, non-humanlike capabilities, participants saw real people as more human, reducing dehumanisation. This suggests that perceived similarity between humans and AI is crucial for the assimilation effect to occur.

“When AI seems emotionally and socially humanlike, people may start treating real humans more like machines, less deserving of care and respect,” said Dr Kim. “As AI becomes more humanlike through seemingly neutral technological advances, it can quietly reshape how we see and treat one another.”

The research offers theoretical insights into the study of employee mistreatment, introducing technology-induced dehumanisation as a new pathway to this behaviour. It also offers a practical lesson for companies using emotionally intelligent AI: they should be wary of unintended negative effects on how consumers treat human employees.

To mitigate dehumanisation of real people, organisations and companies should emphasise the distinctiveness of AI from humans, highlight the non-human capabilities of AI, and use explicit cues to remind consumers they are interacting with real people.

The research quoted in this article is from: Kim, Hye-young and McGill, Ann L. (2025) AI-induced dehumanization. Journal of Consumer Psychology, 35 (3), 363-381. ISSN 1057-7408.

Thursday 24 July 2025